1.3 How to see the distances between points

Because both types of clouds (row-points and column-points) are located in high-dimensional spaces, we cannot observe them directly. The essence of Principal Component Analysis involves searching for a plane on which we project the cloud of points in such a way that the obtained configuration is as close as possible to the original configuration of the cloud in the high-dimensional space. We call this plane the factorial plane.

We obtain the desired plane by making the overall distances between projected points as close as possible to the real distances between points in the space of origin.

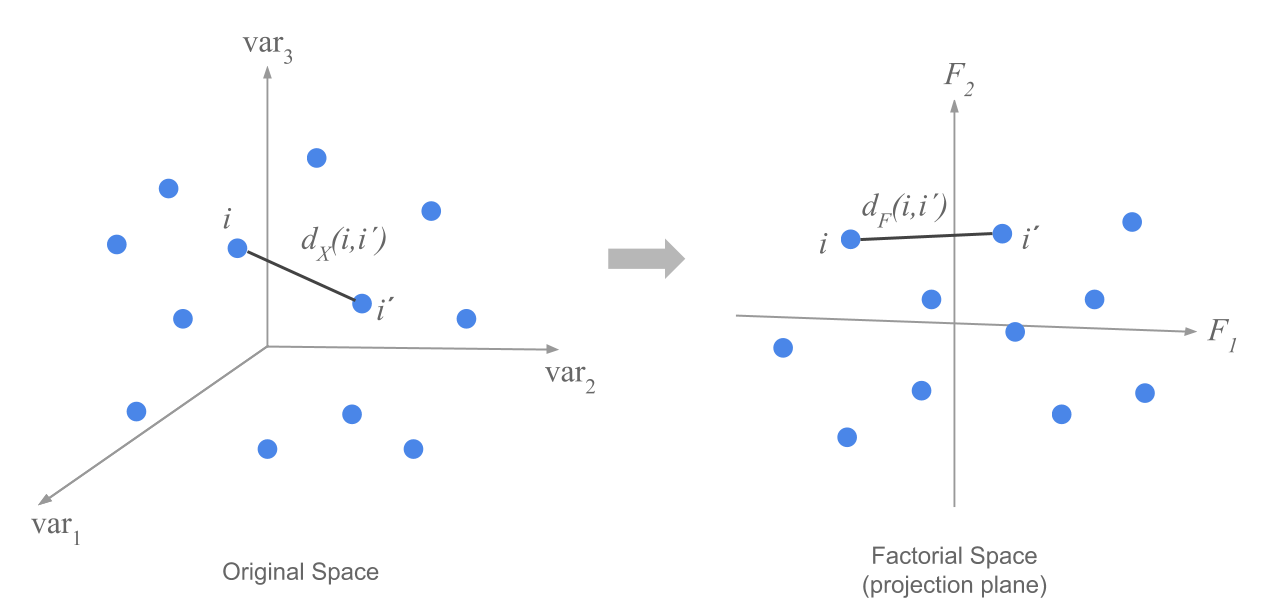

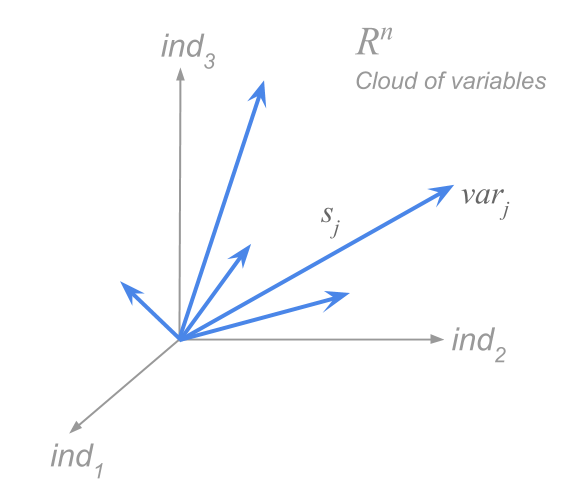

Let’s first consider the cloud of \(n\) individual-points located in the space where each axis corresponds to a variable. The following figure depicts this idea when we have only three variables.

Figure 1.5: Cloud of row-points in first factorial plane

The problem consists of finding the factorial plane such that the distances \(d_F(i,i')\) between all pairs of projected points are as close as possible to the real distances \(d_X(i,i')\) measured in the space of origin.

1.3.1 How to find the projection planes

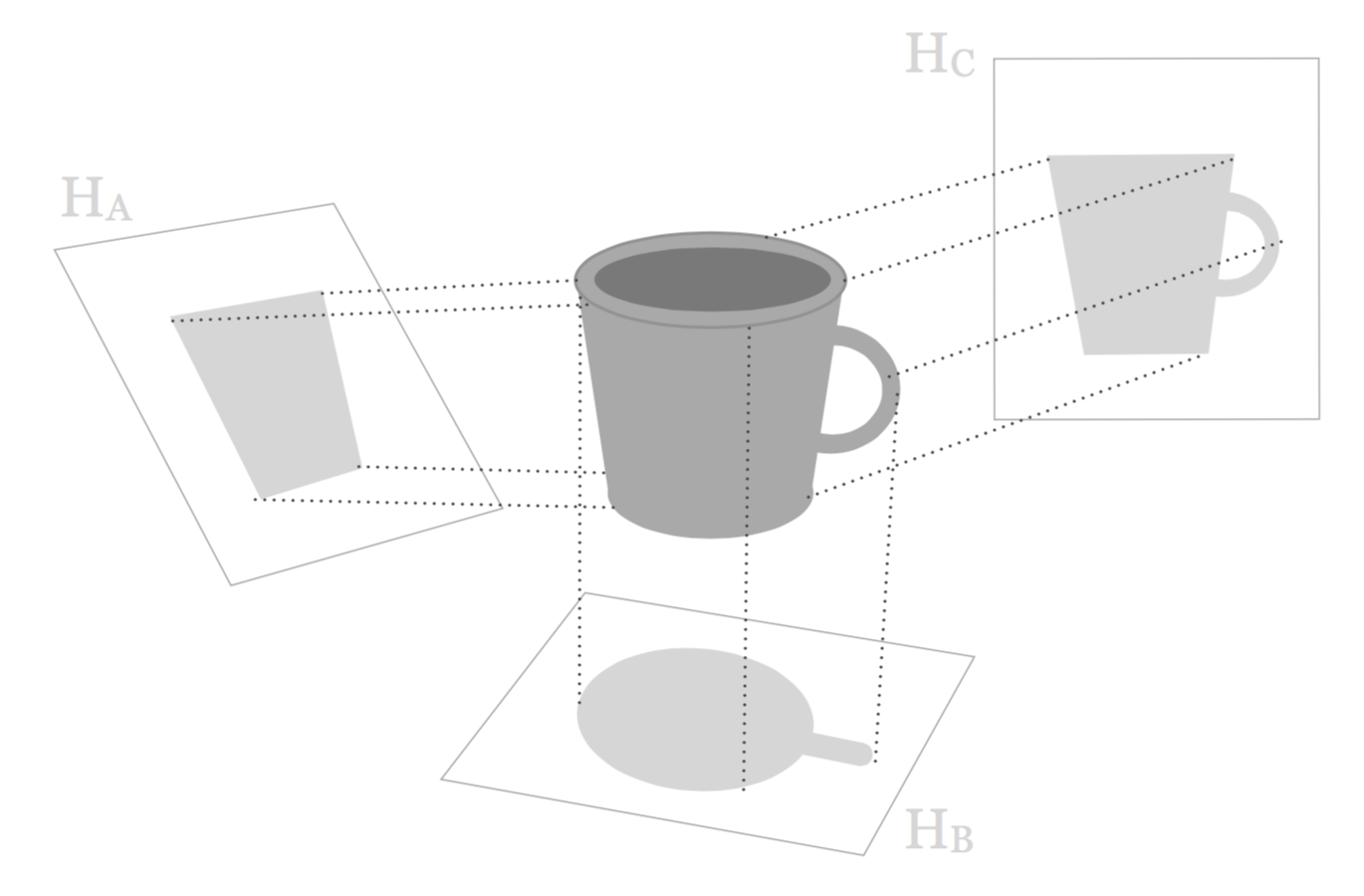

Our goal is to find a subspace of reduced dimension that conserves the maximum of information from the original configuration of the cloud. For instance, let’s pretend that the original cloud has the shape of a mug, like in the following figure:

Furthermore, let’s assume that we can only observe the projection of the mug on a plane of reduced dimension. The question is: Which plane should we choose?

Figure 1.6: Three projections of a mug-shaped cloud of points

We can consider projecting this mug-shaped cloud onto different planes. As you can tell, the projection on the plane \(H_A\) is much more informative than the projection on the plane \(H_B\). At least we can see that the figure on \(H_A\) corresponds to an elongated object, and that one of its ends is wider than the other. In contrast, all the points of the projected cloud on the plane \(H_B\) are confounded, and the projection does not convey a clear idea of the original cloud, except for the shadow of the handle. However, the best projection among the three planes is that of \(H_C\).

We obtain the plane \(H_C\) by searching for the plane that makes the dispersion of the projected points as large as possible:

\[ Max_H \sum_i \sum_{i'} d^{2}_{H} (i, i') \tag{1.2} \]

where \(H\) represents the subspace of the projection.

Searching for the maximum can be written as:

\[ Max_H \sum_i \sum_{i'} d^{2}_{H} (i, i') = Max_H \left \{ 2n \sum_i d^{2}_{H} (i, G) \right \} \tag{1.3} \]

The problem of preserving the projected distances between all pairs of points becomes a problem of preserving the distances between each point and the center of gravity \(G\).
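The identity in equation (1.3) can be checked numerically. The following is a minimal sketch on synthetic data (the random matrix `X` and the variable names are illustrative assumptions, not part of the Cities example); it compares the sum of squared distances over all ordered pairs with \(2n\) times the sum of squared distances to the center of gravity.

```python
import numpy as np

rng = np.random.default_rng(0)      # synthetic data, for illustration only
X = rng.normal(size=(6, 3))         # n = 6 points in a 3-dimensional space
n = X.shape[0]
G = X.mean(axis=0)                  # center of gravity (equal weights)

# Sum of squared distances over all ordered pairs (i, i')
diffs = X[:, None, :] - X[None, :, :]
pairwise = (diffs ** 2).sum()

# 2n times the sum of squared distances to the center of gravity
to_center = 2 * n * ((X - G) ** 2).sum()

print(np.isclose(pairwise, to_center))
```

Because an orthogonal projection is linear, the same check holds for the projected points and the projected center of gravity, which is why the criterion can be written in terms of \(G\).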

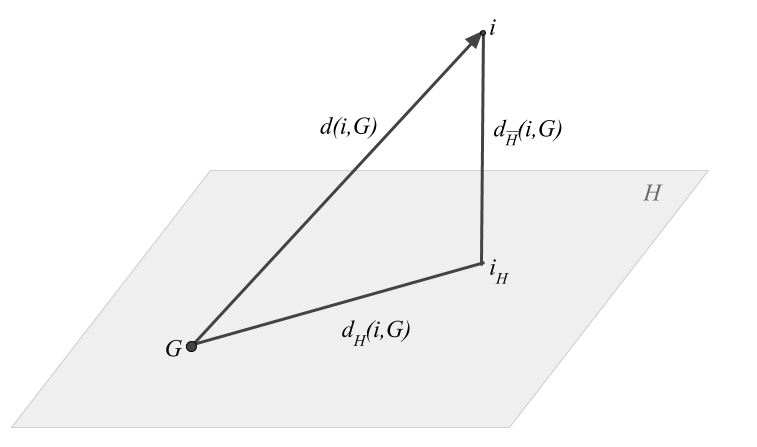

Figure 1.7: Decomposition of the distance between a row-point and the center of gravity

The formula in equation (1.4) is actually an expression for the Pythagorean theorem. The spread of the cloud of points can be decomposed into two terms: the spread in the projection plane, and another (orthogonal) term given by the sum of the distances of the points to the projection plane:

\[ \sum_{i} d^{2} (i, G) = \sum_i d^{2}_{H} (i, G) + \sum_i d^{2}_{\bar{H}} (i, G) \tag{1.4} \]

In this way, the projection plane that guarantees the maximum dispersion between the points is also the plane that gets as close as possible to the original cloud (in the sense of the least squares criterion). This is expressed in the following relation (1.5):

\[ Max \hspace{2mm} \sum_{i} d^{2}_{H} (i, G) \quad \Longleftrightarrow \quad Min \hspace{2mm} \sum_{i} d^{2}_{\bar{H}} (i, G) \tag{1.5} \]
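As an illustration of the decomposition in (1.4), here is a small sketch that projects a synthetic cloud onto an arbitrary plane through the center of gravity and checks that the total spread splits into an in-plane part and an orthogonal part. The plane is chosen at random (an assumption made only for the example); since the total is fixed, making the first term larger necessarily makes the second one smaller, which is the equivalence in (1.5).

```python
import numpy as np

rng = np.random.default_rng(1)
X = rng.normal(size=(50, 3))        # synthetic cloud of 50 points
Xc = X - X.mean(axis=0)             # move the origin to the center of gravity G

# An arbitrary plane H through G, described by an orthonormal basis (columns of Q)
Q, _ = np.linalg.qr(rng.normal(size=(3, 2)))

proj = Xc @ Q @ Q.T                 # orthogonal projection of each point onto H
resid = Xc - proj                   # component orthogonal to H

total = (Xc ** 2).sum()             # sum of d^2(i, G)
in_plane = (proj ** 2).sum()        # sum of d^2_H(i, G)
off_plane = (resid ** 2).sum()      # sum of squared distances to the plane

print(np.isclose(total, in_plane + off_plane))
```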

1.3.2 How to take into account the importance of individuals

Sometimes we may be interested in assigning weights to the individuals based on their relative importance or relevance. When all the individuals have the same importance, we can give a weight equal to \(1/n\) to each of them. Thus, the fit criterion becomes:

\[ Max \hspace{2mm} \sum_{i} \frac{1}{n} d^{2}_{H} (i, G) = Max \hspace{2mm} \frac{\sum_i (x_{iH} - \bar{x}_H)^2}{n} \tag{1.6} \]

In the general case where each individual has its own weight \(p_i\) with \(\sum_i p_i = 1\), then the fit criterion is expressed as:

\[ Max \hspace{2mm} \sum_{i} p_i \hspace{1mm} d^{2}_{H} (i, G) \tag{1.7} \]

The product of the weight of a point, \(p_i\), times the square of its distance to the center of gravity, \(d^{2}_{H} (i, G)\), is known as the inertia of the point. In this case, the problem involves looking for the projection plane that maximizes the projected inertia.

1.3.3 Inertia Decomposition

The total inertia is defined as:

\[ I = \sum_{i=1}^{n} p_i \hspace{1mm} d^2(i,G) \tag{1.8} \]
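A short sketch of how the total inertia in (1.8) could be computed with unequal weights. The data and the weight vector `p` are hypothetical, and the center of gravity is taken to be the weighted mean of the points, which is an assumption of this example.

```python
import numpy as np

rng = np.random.default_rng(2)
X = rng.normal(size=(5, 4))                  # 5 individuals, 4 variables (synthetic)
p = np.array([0.10, 0.20, 0.30, 0.25, 0.15]) # hypothetical weights, summing to 1

G = p @ X                                    # weighted center of gravity (assumed)
sq_dist = ((X - G) ** 2).sum(axis=1)         # d^2(i, G) for each individual
point_inertia = p * sq_dist                  # inertia of each point
total_inertia = point_inertia.sum()          # total inertia, as in (1.8)

print(point_inertia, total_inertia)
```

With `p` set to `1/n` for every individual, `total_inertia` reduces to the average squared distance to the ordinary mean.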

The total inertia can be broken down into two additive terms:

- projected inertia on a subspace \(H\)

- inertia orthogonally projected on a subspace \(\bar{H}\)

\[ I = I_H + I_{\bar{H}} \tag{1.9} \]

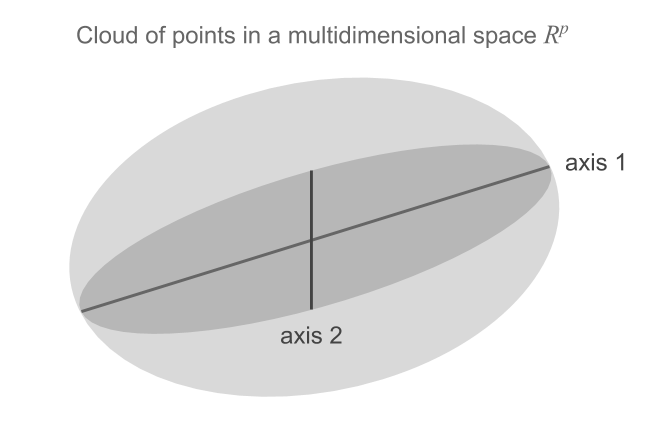

The problem of searching for the subspace that makes the dispersion of the projected points as large as possible can also be put in terms of inertias. Namely, we look for a plane \(H\) that maximizes the projected inertia (see figure below).

Figure 1.8: Successive directions of maximum inertia

In order to find the optimal subspace, we begin by looking for a one-dimensional space (i.e. a line) of maximum projected inertia. If all individuals have the same weight, then this first subspace coincides with the direction of maximum stretch of the cloud.

Having found the first one-dimensional subspace, the next step involves finding a two-dimensional subspace (i.e. a plane) with maximum projected inertia. Then, we look for a three-dimensional space, and so on and so forth. At each step, we look for a higher dimensional space such that the projected inertia is as large as possible.

It can be proved that the two-dimensional plane must contain the one-dimensional space (i.e. the line) of maximum projected inertia. Having found the first direction of maximum inertia, one needs to find another line, orthogonal to the first one, such that the plane formed by them has maximum inertia. Analogously, the subspace of three dimensions is formed with the two-dimensional space by adding an orthogonal direction to this plane (see figure 1.8).

Because the inertia is additive in orthogonal directions, PCA involves the decomposition of the total inertia into \(p\) additive components, as many as the number of dimensions in the original space.

\[ I = I_1 + I_2 + \dots + I_p \tag{1.10} \]

Interestingly, the inertias (the dispersions) are in decreasing order, which means that each subsequent orthogonal direction accounts for no more inertia than the previous one, that is, \(I_1 \geq I_2 \geq \dots \geq I_p\).

This implies that the configuration of the projected cloud on the first factorial plane is as close as possible (in the least squares sense) to the original configuration.
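The decomposition (1.10) can be illustrated numerically. This section does not say how the directions are computed; the sketch below assumes the standard approach of diagonalizing the covariance matrix (with equal weights \(1/n\)) on synthetic data, and checks that the inertias are ordered, that they add up to the total inertia, and that the first one equals the inertia of the cloud projected on the first direction.

```python
import numpy as np

rng = np.random.default_rng(3)
# Synthetic correlated data: 100 individuals, 4 variables
X = rng.normal(size=(100, 4)) @ rng.normal(size=(4, 4))
n = X.shape[0]
Xc = X - X.mean(axis=0)

C = Xc.T @ Xc / n                       # covariance matrix with weights 1/n
eigvals, eigvecs = np.linalg.eigh(C)    # eigh returns eigenvalues in ascending order
order = np.argsort(eigvals)[::-1]
inertias = eigvals[order]               # I_1 >= I_2 >= ... >= I_p
axes = eigvecs[:, order]                # orthogonal directions, one per column

total_inertia = (Xc ** 2).sum() / n
first_axis_inertia = ((Xc @ axes[:, 0]) ** 2).sum() / n

print(np.isclose(inertias.sum(), total_inertia))
print(np.all(np.diff(inertias) <= 0))
print(np.isclose(first_axis_inertia, inertias[0]))
```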

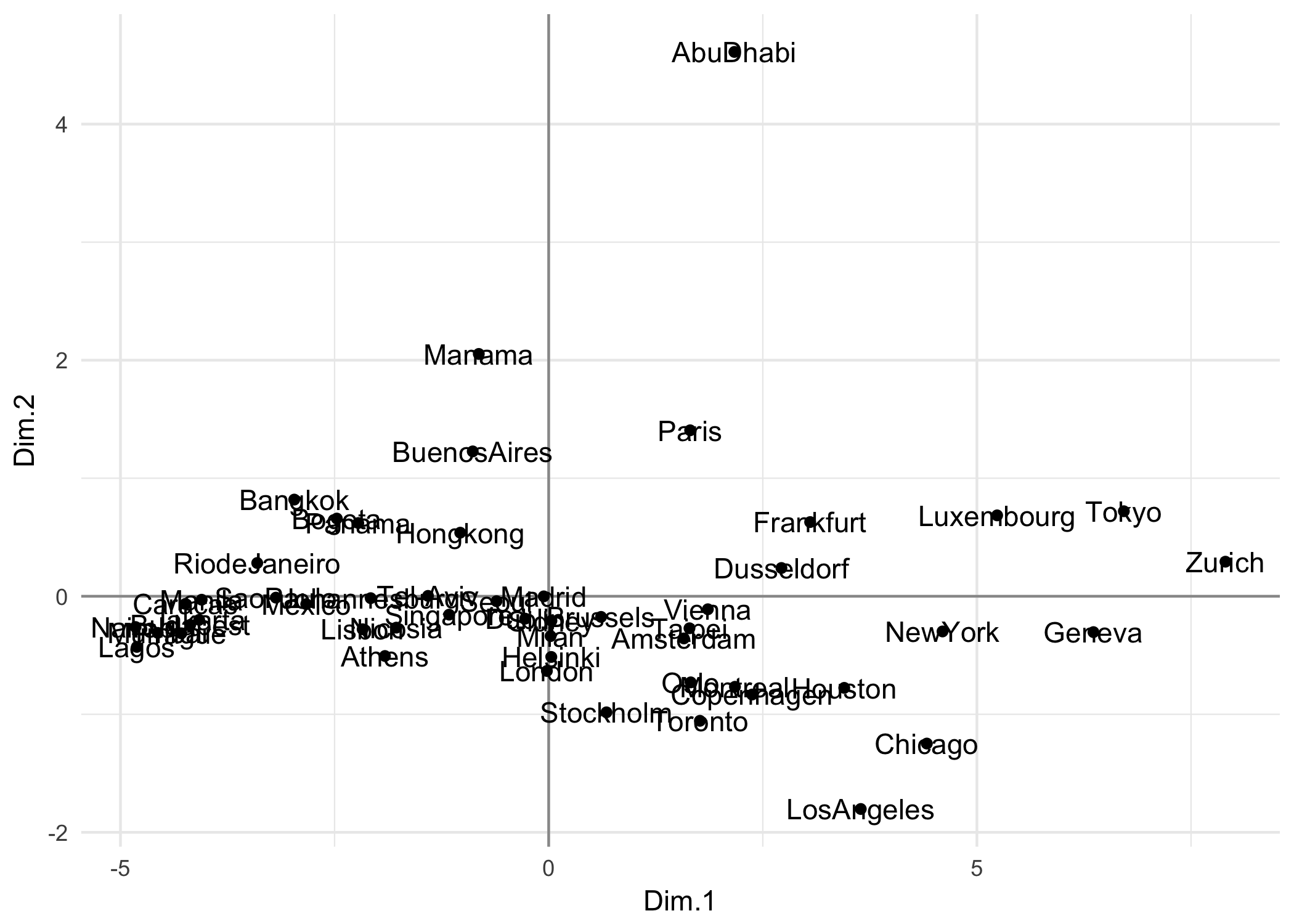

For example, the figure below shows the plane of maximum projected inertia for the Cities data.

Figure 1.9: Cloud of variable-points in the standardized PCA

This is the representation of the cities, in a factorial plane, that best resembles the existing distances between the cities according to the salary level. For instance, we can tell that Tokyo, Zurich and Geneva are close to each other, indicating similar salaries among the analyzed professions. The same can be said about Manila, Mumbai, Lagos, and Prague; these cities have similar salaries among them, but they have a contrasting difference with the former group (Tokyo, Zurich, and Geneva).

We can also assume a certain similarity between northern cities such as Stockholm and Copenhagen, whereas there seems to be a substantial salary difference between Paris and American cities (Los Angeles, Chicago, Toronto, Houston).

The center of the graph represents the average point of the cloud. This corresponds to cities with salaries closer to the mean values.

Notice the difference in scale utilized in the x-axis and the y-axis. Actually, the cloud of points is very extended along the x-axis \(F_1\).

1.3.4 Visualizing association between variables

Let’s discuss what the analysis involves regarding the cloud of variable-points. Recall that the cloud of variable-points is defined by the columns of the data matrix \(\mathbf{X}\). Without loss of generality, we will assume that the variables are mean-centered and normalized by the standard deviation.

The first thing to do is to define a measure of distance between variables. One way to represent a certain notion of proximity among variables is given by the following formula:

\[ d^2(j, j') = 2 (1 - cor(j, j')) \tag{1.11} \]

If two variables \(j\) and \(j'\) measure the same thing (in the sense of having a linear correlation of 1) then their points will overlap.

In the case where two variables \(j\) and \(j'\) have a linear correlation equal to -1 (when one increases, the other decreases), the two variable-points will have a maximum distance in opposite directions.

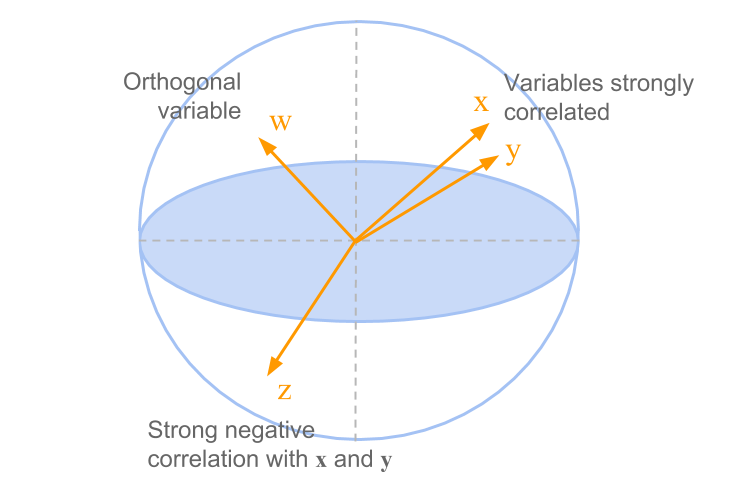

When one variable does not provide any information about the other one, their correlation coefficient will be zero. This corresponds to an intermediate distance in which the variables form a right angle. These cases are illustrated in figure 1.10.

Figure 1.10: Cloud of variable-points in the standardized PCA

If the correlation is equal to 1, the squared distance is zero. Likewise, if the correlation is -1 the squared distance is 4, and if the correlation is zero then the squared distance is 2. This formula defines the so-called standardized Principal Component Analysis.

It can be shown that all the variable-points are located at a one-unit distance from the origin (on a hypersphere of radius 1). The cloud of variable-points is defined by a set of vectors that start from the center of the sphere. The correlation coefficient between two variables coincides with the cosine of the angle formed by the two corresponding vectors (associated with the variables).

Two variables that are close to each other will form a small angle, which corresponds to a large correlation coefficient. Two independent variables will have a zero correlation coefficient, thus forming a right angle between them. In turn, two opposed variables will have a correlation coefficient close to -1 and thus will appear at two opposite locations on the sphere.
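Both statements, the distance formula (1.11) and the reading of the correlation as a cosine, can be verified on a small example. The sketch below uses synthetic data and assumes equal weights \(1/n\) for the individuals, with the standard deviation computed with the \(1/n\) convention.

```python
import numpy as np

rng = np.random.default_rng(4)
X = rng.normal(size=(30, 3))        # 30 individuals, 3 variables (synthetic)
n = X.shape[0]

# Standardize with the 1/n convention (np.std uses ddof=0 by default)
Z = (X - X.mean(axis=0)) / X.std(axis=0)

j, k = 0, 1                          # two variables to compare
cor = np.corrcoef(X[:, j], X[:, k])[0, 1]

# Squared distance between the two variable-points, with weights 1/n
d2 = ((Z[:, j] - Z[:, k]) ** 2).sum() / n

# Cosine of the angle between the two variable vectors
cos = (Z[:, j] @ Z[:, k]) / (np.linalg.norm(Z[:, j]) * np.linalg.norm(Z[:, k]))

print(np.isclose(d2, 2 * (1 - cor)))   # equation (1.11)
print(np.isclose(cos, cor))            # correlation as a cosine
```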

Analogously to the cloud of row-points, we also seek to find a projection plane providing the largest amount of information about the associations between the variables. More precisely, we look for a plane that provides information about the angles between variables, that is, about their correlations.

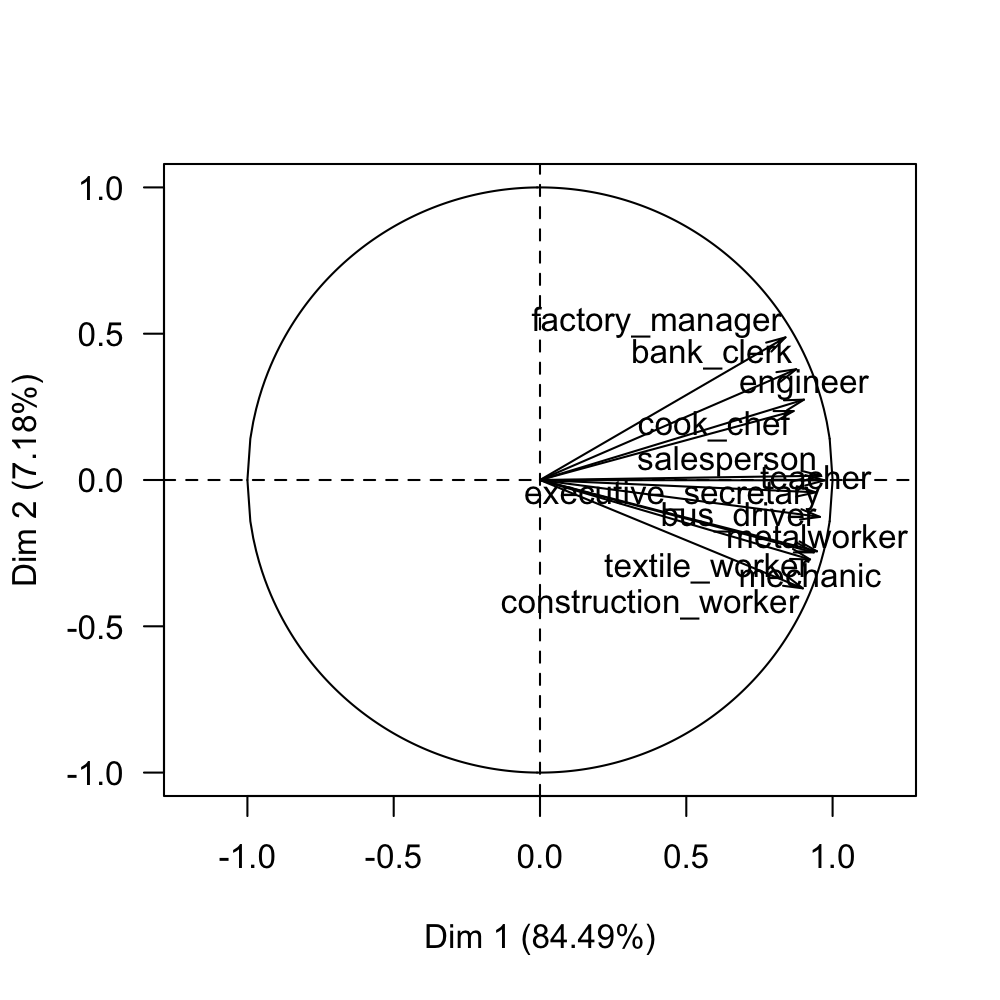

In the case of the 12 variables observed on the 51 cities, we obtain the configuration on the first factorial plane, displayed in figure 1.11.

Figure 1.11: Circle of correlations on the first factorial plane

Notice that in this case all the variables are positively correlated. Moreover, from the circle of correlations, it is possible to observe two groups of variables. One group is formed by departmental_head, engineers, bank_clerk, and cook_chef. The other group is formed by the rest of the professions.

In the matrix of correlations (see table 1.1) we can see the magnitude of the association between the variables. As you can tell, all the correlations are positive and large, ranging from 0.59 to 0.96.

| | tea | bus | mec | con | met | coo | dep | eng | ban | exe | sal | tex |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| tea | 1.00 | 0.96 | 0.84 | 0.83 | 0.91 | 0.75 | 0.78 | 0.81 | 0.82 | 0.92 | 0.88 | 0.88 |
| bus | 0.96 | 1.00 | 0.89 | 0.88 | 0.94 | 0.76 | 0.74 | 0.82 | 0.80 | 0.93 | 0.89 | 0.92 |
| mec | 0.84 | 0.89 | 1.00 | 0.95 | 0.93 | 0.80 | 0.64 | 0.74 | 0.70 | 0.88 | 0.89 | 0.89 |
| con | 0.83 | 0.88 | 0.95 | 1.00 | 0.93 | 0.72 | 0.59 | 0.70 | 0.64 | 0.86 | 0.86 | 0.92 |
| met | 0.91 | 0.94 | 0.93 | 0.93 | 1.00 | 0.76 | 0.69 | 0.80 | 0.72 | 0.92 | 0.88 | 0.94 |
| coo | 0.75 | 0.76 | 0.80 | 0.72 | 0.76 | 1.00 | 0.82 | 0.82 | 0.79 | 0.80 | 0.85 | 0.71 |
| dep | 0.78 | 0.74 | 0.64 | 0.59 | 0.69 | 0.82 | 1.00 | 0.87 | 0.89 | 0.80 | 0.79 | 0.65 |
| eng | 0.81 | 0.82 | 0.74 | 0.70 | 0.80 | 0.82 | 0.87 | 1.00 | 0.85 | 0.87 | 0.85 | 0.81 |
| ban | 0.82 | 0.80 | 0.70 | 0.64 | 0.72 | 0.79 | 0.89 | 0.85 | 1.00 | 0.87 | 0.85 | 0.73 |
| exe | 0.92 | 0.93 | 0.88 | 0.86 | 0.92 | 0.80 | 0.80 | 0.87 | 0.87 | 1.00 | 0.94 | 0.93 |
| sal | 0.88 | 0.89 | 0.89 | 0.86 | 0.88 | 0.85 | 0.79 | 0.85 | 0.85 | 0.94 | 1.00 | 0.89 |
| tex | 0.88 | 0.92 | 0.89 | 0.92 | 0.94 | 0.71 | 0.65 | 0.81 | 0.73 | 0.93 | 0.89 | 1.00 |

The fact that all the variables are positively correlated implies that if one type of salary in a city is high, then the other salaries in that city tend to be high as well.

In later sections of the book we will emphasize the idea that Principal Component Analysis can be approached from the point of view of the variable-points having a distance based on the correlation between the variables. In other words, a PCA on the row-points is not an independent analysis from a PCA on the variable-points. Quite the opposite in fact, it is possible to obtain the results of a PCA on a set of points (e.g. variables) given the results of the other set (e.g. the rows). This, as we’ll see, provides a set of rules extremely valuable in the interpretation of results.

1.3.5 Normalized PCA or non-normalized PCA?

As we’ve seen, Principal Component Analysis consists of decomposing the inertia of the original cloud along orthogonal directions. Each of the obtained orthogonal directions accounts for a proportion of the original inertia.

But, what is the contribution of each variable to the original inertia?

The distance between variable-points defined in equation (1.11) implies that the contribution of each variable to the total inertia is the same, equal to \(1/p\).

The inertia of the cloud of variable-points with respect to the origin coincides with the number of active variables.

\[ I_T = \sum_{j=1}^{p} d^2(j, O) = p \tag{1.12} \]

In order for equation (1.12) to make sense, we need to use standardized values (mean-centered and unit-variance):

\[ z_{ij} = \frac{x_{ij} - \bar{x}_j}{s_j} \tag{1.13} \]

where \(\bar{x}_j\) is the mean of variable \(j\) and \(s_j\) is the corresponding standard deviation. In this case we talk about Normalized Principal Component Analysis.

With standardized data, the squared distance between each variable and the origin is equal to 1:

\[ d^2(j, O) = \sum_{i=1}^{n} \frac{1}{n} \left ( \frac{x_{ij} - \bar{x}_j}{s_j} \right )^2 = \frac{\frac{1}{n} \sum_i (x_{ij} - \bar{x}_j)^2}{s_{j}^{2}} = 1 \tag{1.14} \]

We should mention that the use of Normalized PCA is not always justified. For example, if a PCA is performed by an analyst working for a bank, it may be more interesting to assign a larger weight to the products that contribute most to the volume of the deposits. That is, the importance given to the different variables should be chosen according to the goal of the analysis.

If we use the raw data (i.e. mean-centered only, no scaling by the standard deviation), the squared distance of one variable to the origin is given by:

\[ d^2(j,O) = \sum_{i=1}^{n} \frac{1}{n} (x_{ij} - \bar{x}_j)^2 = var(j) \tag{1.15} \]

With non-normalized data, the variables are no longer located on a sphere of radius 1. Instead, the length of the segment (i.e. the vector) of each variable is equal to its standard deviation. We can then think of the cloud of variable-points as a set of vectors, each one of length equal to the standard deviation of the variable, and forming angles defined by the correlation coefficient between the variables.

This type of analysis is called Non-Normalized Principal Component Analysis.

With this type of PCA, the distance between two variables depends on the correlation (i.e. the angle between them), as well as on their variances.

Figure 1.12: Cloud of variable-points in a non-normalized PCA
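To make the contrast between (1.14) and (1.15) concrete, the sketch below computes the length of each variable vector under both conventions. The data are synthetic, with deliberately different scales per column, and the individuals are assumed to have equal weights \(1/n\).

```python
import numpy as np

rng = np.random.default_rng(5)
# Three synthetic variables with very different spreads
X = rng.normal(size=(40, 3)) * np.array([1.0, 5.0, 20.0])
n, p = X.shape

Xc = X - X.mean(axis=0)             # mean-centered only (non-normalized PCA)
s = X.std(axis=0)                   # standard deviations, 1/n convention
Z = Xc / s                          # mean-centered and scaled (normalized PCA)

sq_len_normalized = (Z ** 2).sum(axis=0) / n   # equation (1.14): all equal to 1
sq_len_raw = (Xc ** 2).sum(axis=0) / n         # equation (1.15): the variances

print(sq_len_normalized)            # each variable lies at distance 1 from the origin
print(np.sqrt(sq_len_raw), s)       # vector lengths match the standard deviations
print(sq_len_normalized.sum(), p)   # total inertia equals p, as in (1.12)
```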

The total inertia of the cloud of variable-points is given by the sum of the variances of each variable:

\[ I_T = \sum_{j=1}^{p} d^2(j,O) = \sum_{j=1}^{p} var(j) \tag{1.16} \]

The contribution of each variable to the total inertia is given by:

\[ ctr_j = \frac{var(j)}{\sum_j var(j)} \tag{1.17} \]

The variance is a function of the unit of measurement in which a variable is measured. This provides a valuable degree of freedom to tune the importance of each variable in the analysis.

In practice, it is usually preferable to assign the same importance to all the variables. This is a requirement when the active variables have different units of measurement (e.g. euros, grams, meters, etc).

In our working example with the data about salaries in various cities, the summary statistics for the active variables are displayed in table 1.2:

| | weight | mean | stdev | min | max |
|---|---|---|---|---|---|
| teacher | 51 | 16801.96 | 13375.19 | 600 | 56800 |
| bus_driver | 51 | 14311.76 | 10927.24 | 400 | 46100 |
| mechanic | 51 | 12384.31 | 8605.61 | 700 | 30500 |
| construction_worker | 51 | 10343.14 | 8321.81 | 200 | 28000 |
| metalworker | 51 | 15145.10 | 10346.23 | 800 | 38700 |
| cook_chef | 51 | 15615.69 | 8855.67 | 500 | 33900 |
| factory_manager | 51 | 30933.33 | 21462.03 | 1500 | 95000 |
| engineer | 51 | 24664.71 | 14158.57 | 1600 | 59700 |
| bank_clerk | 51 | 18749.02 | 13547.30 | 1200 | 58800 |
| executive_secretary | 51 | 13311.76 | 7645.12 | 1400 | 31500 |
| salesperson | 51 | 9658.82 | 6124.87 | 400 | 24700 |
| textile_worker | 51 | 9247.06 | 6493.76 | 300 | 23800 |

From the table above, we see that factory manager and engineer are the professions with the highest average salaries. Next come bank clerk, teacher, cook chef, and metalworker. In the last two places are salesperson and textile worker, which are considered to be low-skill professions mostly performed by women.

Because all active variables are measured in the same unit (in dollars), we could carry out a non-normalized PCA. However, if we did this type of analysis, it would imply giving more importance to those professions with higher salary. Why? Because these are the variables that have a larger spread. If our goal is to compare the cities by giving the same importance to all professions, then it is more suitable to apply a normalized PCA. This, in turn, lets us focus on the matrix of correlations among the active variables.
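To see how unequal the importance of the professions would be in a non-normalized PCA, we can apply formula (1.17) to the standard deviations reported in table 1.2. This is only a sketch: the values are copied from the table, and since the contributions are ratios of variances, it does not matter whether the table's standard deviations were computed with \(1/n\) or \(1/(n-1)\).

```python
import numpy as np

# Standard deviations copied from table 1.2
stdev = {
    "teacher": 13375.19,
    "bus_driver": 10927.24,
    "mechanic": 8605.61,
    "construction_worker": 8321.81,
    "metalworker": 10346.23,
    "cook_chef": 8855.67,
    "factory_manager": 21462.03,
    "engineer": 14158.57,
    "bank_clerk": 13547.30,
    "executive_secretary": 7645.12,
    "salesperson": 6124.87,
    "textile_worker": 6493.76,
}

names = list(stdev)
var = np.array(list(stdev.values())) ** 2
ctr = var / var.sum()                 # contribution of each variable, equation (1.17)

# Print the contributions from largest to smallest
for name, c in sorted(zip(names, ctr), key=lambda t: -t[1]):
    print(f"{name:20s} {c:6.1%}")
```

In this calculation `factory_manager` alone accounts for more than a quarter of the total inertia, whereas in a normalized PCA each of the 12 variables contributes \(1/12\).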

Interestingly, both clouds of points (individuals and variables) have the same inertia. On one hand, the inertia of the cloud of row-points is the sum of the squared distances between each point and the center of gravity, weighted by the importance of each individual. This inertia can be expressed with respect to each axis in the original space (in which each axis corresponds to a variable):

\[ I_T = \sum_{i=1}^{n} \frac{1}{n} \sum_{j=1}^{p} (x_{ij} - \bar{x}_j)^2 = \sum_{j=1}^{p} \sum_{i=1}^{n} \frac{1}{n} (x_{ij} - \bar{x}_j)^2 = \sum_{j=1}^{p} var(j) \tag{1.18} \]

The variance along a given axis is the spread of the projected cloud on that axis. Because the axes are orthogonal, the total inertia is equal to the sum of the variances of the variables. Therefore, the inertias of both clouds are the same.
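Equation (1.18) amounts to summing the same squared deviations in two different orders. A brief sketch on synthetic data, assuming equal weights \(1/n\): the inertia is first accumulated individual by individual (cloud of row-points), then variable by variable (cloud of variable-points).

```python
import numpy as np

rng = np.random.default_rng(6)
X = rng.normal(size=(25, 4)) * np.array([1.0, 2.0, 3.0, 4.0])   # synthetic data
n = X.shape[0]
Xc = X - X.mean(axis=0)

# Cloud of row-points: weighted squared distance of each individual to G
per_individual = (Xc ** 2).sum(axis=1) / n
row_inertia = per_individual.sum()

# Cloud of variable-points: squared distance of each variable to the origin
per_variable = (Xc ** 2).sum(axis=0) / n      # the variances
col_inertia = per_variable.sum()

print(np.isclose(row_inertia, col_inertia))
```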

1.3.6 Distance Matrices

The rows (i.e. the cities) are located in a space where we measure distance in the classic sense. In a normalized PCA we have:

\[ d^2(i, i') = \sum_{j=1}^{p} \left ( \frac{x_{ij} - x_{i'j}}{s_j} \right )^2 \tag{1.19} \]

And in the case of a non-normalized PCA:

\[ d^2(i, i') = \sum_{j=1}^{p} (x_{ij} - x_{i'j})^2 \tag{1.20} \]

In the cloud of variable-points, the distance is defined by the formula (1.11) when we are carrying out a normalized PCA. In the case of a non-normalized PCA, the distance becomes:

\[ d^2(j, j') = var(j) + var(j') - 2 cov(j,j') \tag{1.21} \]

All these distances can be organized in square matrices: an \(n \times n\) distance matrix for the distances between individuals, and a \(p \times p\) matrix for the distances between variables.
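The four distance matrices described above can be assembled with a few array operations. The sketch below uses synthetic data and the \(1/n\) convention for variances and covariances (both are assumptions of the example); the matrix names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(7)
X = rng.normal(size=(8, 3)) * np.array([1.0, 4.0, 10.0])   # n = 8, p = 3 (synthetic)
n, p = X.shape
s = X.std(axis=0)                                  # standard deviations (1/n)

# n x n matrices of squared distances between individuals
diffs = X[:, None, :] - X[None, :, :]
D_rows_raw = (diffs ** 2).sum(axis=2)              # equation (1.20)
D_rows_norm = ((diffs / s) ** 2).sum(axis=2)       # equation (1.19)

# p x p matrices of squared distances between variables
R = np.corrcoef(X, rowvar=False)
D_vars_norm = 2 * (1 - R)                          # equation (1.11)

C = np.cov(X, rowvar=False, bias=True)             # covariances with 1/n
v = np.diag(C)
D_vars_raw = v[:, None] + v[None, :] - 2 * C       # equation (1.21)

print(D_rows_raw.shape, D_vars_raw.shape)
```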

If we don’t define how to measure the distances between points, then the clouds of points remain undefined. In our approach, we assume that the rows (the cities) are located in a metric (Euclidean) space, which means that the distance is measured by the classic formula of the sum of squared differences:

\[ d^2 (i, i') = \sum_{j=1}^{p} (x_{ij} - x_{i'j})^2 = (x_i - x_{i'})^{\mathsf{T}} \mathbf{I} (x_i - x_{i'}) \tag{1.22} \]

Notice that in this case, calculating the distance between two individuals takes the form of a scalar product, where the metric matrix is the identity matrix. This corresponds to a non-normalized PCA.

In contrast, when we carry out a normalized PCA, the distance between two individuals is measured by the formula:

\[ d^2(i, i') = \sum_{j=1}^{p} \left ( \frac{x_{ij} - x_{i'j}}{s_j} \right )^2 = (x_i - x_{i'})^{\mathsf{T}} \mathbf{S}^{-2} (x_i - x_{i'}) \tag{1.23} \]

where:

\[ \mathbf{S}^{-2} = \left(\begin{array}{cccc} 1/s_{1}^{2} & 0 & \cdots & 0 \\ 0 & 1/s_{2}^{2} & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & 1/s_{p}^{2} \\ \end{array}\right) \]

In this case, the metric matrix is given by \(\mathbf{S}^{-2}\) (the diagonal matrix of the inverses of the variances of the variables). Notice that all Euclidean metrics can be expressed in a canonical form by using a change of coordinates. When using \(\mathbf{S}^{-2}\), such a change of coordinates involves dividing the coordinates of the points by the standard deviation of each variable.
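The equivalence between using the metric \(\mathbf{S}^{-2}\) and rescaling the coordinates can be checked directly. In this sketch (synthetic data, \(1/n\) standard deviations), the distance between two individuals is computed once as a quadratic form with \(\mathbf{S}^{-2}\) and once as an ordinary Euclidean distance after dividing each coordinate by its standard deviation.

```python
import numpy as np

rng = np.random.default_rng(8)
X = rng.normal(size=(10, 3)) * np.array([2.0, 50.0, 0.1])   # synthetic data
s = X.std(axis=0)

S_inv2 = np.diag(1.0 / s ** 2)       # metric matrix S^{-2}

diff = X[0] - X[1]                   # two individuals, i and i'
d2_metric = diff @ S_inv2 @ diff     # quadratic form in equation (1.23)

# Same distance with the identity metric, after the change of coordinates
X_scaled = X / s
d2_rescaled = ((X_scaled[0] - X_scaled[1]) ** 2).sum()

print(np.isclose(d2_metric, d2_rescaled))
```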

Regarding the cloud of variable-points, the distance between variables is defined based on the correlation between two variables. The correlation itself is given by the scalar product between the two standardized vectors:

\[ cor(j,j') = \sum_{i=1}^{n} \frac{1}{n} \left ( \frac{x_{ij} - \bar{x}_j}{s_j} \right ) \left ( \frac{x_{ij'} - \bar{x}_{j'}}{s_{j'}} \right ) = \mathbf{z}_{j}^{\mathsf{T}} \mathbf{N} \mathbf{z}_{j'} \tag{1.24} \]

The natural metric matrix to be used in this case is the diagonal matrix \(\mathbf{N}\) of weights for individuals (\(1/n\) or \(p_i\)). Thus, the distance between variables is defined as:

\[ d^2(j,j') = \langle z_j, z_j \rangle + \langle z_{j'}, z_{j'} \rangle - 2 \langle z_j, z_{j'} \rangle \tag{1.25} \]

which coincides with (1.11) when the data are mean-centered and standardized (normalized PCA), or with (1.21) when the data are mean-centered only and the centered variables take the place of \(z_j\) (non-normalized PCA).